
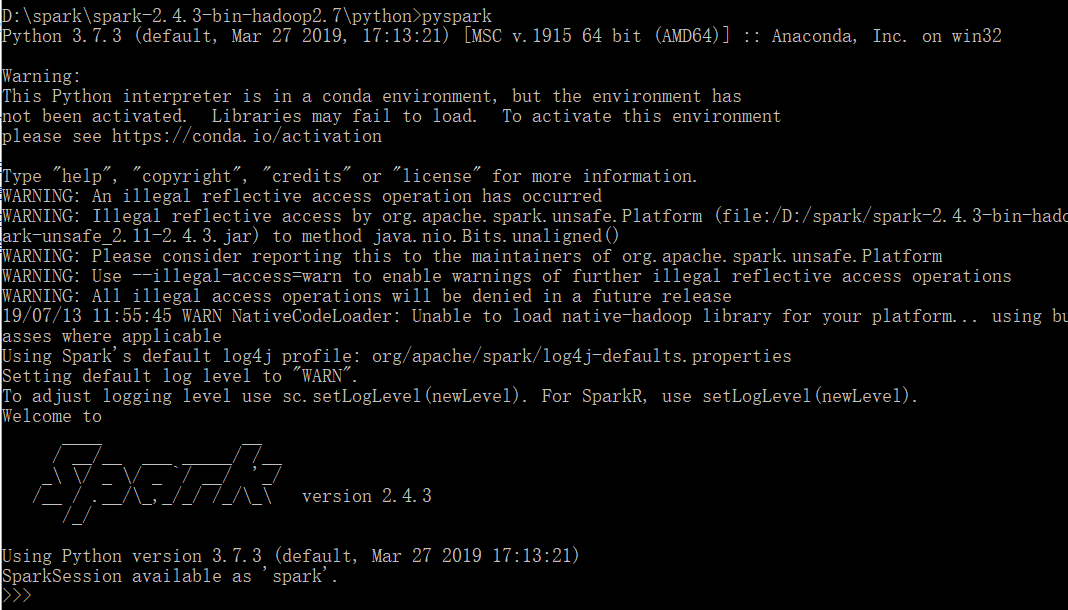

# How to install PySpark in Anaconda

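```python
import pandas as pd
import pyodbc
from pyspark.sql import SparkSession

# NOTE: Since Spark 2.0, SparkSession is the entry point of Spark and replaces the old
# SQLContext and HiveContext, which are kept only for backward compatibility.
spark = SparkSession.builder.appName("read-hive-odbc").getOrCreate()  # app name is illustrative

print("Reading Hive table using pyodbc.")
# Make sure you have predefined the ODBC DSN in Microsoft ODBC Data Sources (64-bit)
# and tested its connectivity to the data source (Impala, Hive, MySQL, etc.).
conn = pyodbc.connect('DSN=mypredefinedodbcdsn64bit', autocommit=True)
crsr = conn.cursor()
crsr.execute('SELECT * FROM mytestdb.my_test_table limit 5')
rows = crsr.fetchall()  # fetch the result before reusing the cursor
# Catalog call: one row per table column (column_name, type_name, ...)
schema = [(c.column_name, c.type_name) for c in crsr.columns(table='my_test_table')]
print(rows, schema)

print("Reading Hive table using pandas/pyodbc.")
sqlqry = "SELECT col1, col2, col3 FROM mytestdb.my_test_table limit 5"
pandasDF = pd.read_sql(sqlqry, conn)  # assumed step: the read call is not in the original

# Assumed step: build the Spark DataFrame from the pandas result
sparkDF = spark.createDataFrame(pandasDF)
sparkDF.show()  # show the first rows of the dataframe
print(sparkDF.limit(10).toPandas().head(3))  # a Spark dataframe can be converted back to pandas

print("Cleanup spark session when done.")
conn.close()
spark.stop()
```

The code above connects to the Hive table through a predefined ODBC DSN with pyodbc and reads the rows and column metadata. It then loads a query result into pandas and converts it into a PySpark DataFrame.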
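pyodbc follows the Python DB-API 2.0 specification (PEP 249). You can therefore try its connect, cursor, execute and fetch flow without a Hive DSN. The sketch below is a minimal illustration under stated assumptions. It swaps in the standard-library `sqlite3` module and an in-memory table as a stand-in for `mytestdb.my_test_table`. The helper name `read_table` and the sample rows are hypothetical. The helper takes column names from `cursor.description`, which is a portable DB-API alternative to pyodbc's catalog call `Cursor.columns()`.

```python
import sqlite3


def read_table(conn, query):
    """Hypothetical helper: run a query on any DB-API 2.0 connection,
    return (column_names, rows)."""
    crsr = conn.cursor()
    try:
        crsr.execute(query)
        # cursor.description has one 7-item tuple per result column; item 0 is the name
        columns = [d[0] for d in crsr.description]
        rows = crsr.fetchall()
    finally:
        crsr.close()
    return columns, rows


# Stand-in for the Hive table: an in-memory SQLite table with made-up sample rows
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE my_test_table (col1 INTEGER, col2 TEXT, col3 REAL)")
conn.executemany(
    "INSERT INTO my_test_table VALUES (?, ?, ?)",
    [(i, f"row{i}", i * 1.5) for i in range(10)],
)

# ORDER BY makes the LIMIT deterministic in this sketch
columns, rows = read_table(conn, "SELECT col1, col2, col3 FROM my_test_table ORDER BY col1 LIMIT 5")
print(columns)
print(rows)
conn.close()
```

With pyodbc, the same helper should accept the connection returned by `pyodbc.connect(...)`, because both libraries expose `cursor()`, `execute()`, `fetchall()` and `description`.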
